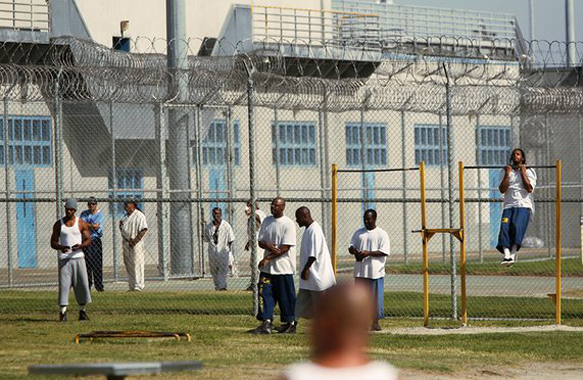

Photograph: Echo/Getty Images/Cultura RF

By Paul L. Thomas, Ed.D. | Originally Published at The Becoming Radical. May 5, 2014

The term “digital divide” is commonly used in education as a subset of the “achievement gap,” representing the inequity between impoverished and affluent students. Both terms, however, tend to keep the focus on observable or measurable outcomes and thus distract attention from the inequity of opportunity that is likely the foundational source of those outcomes.

Currently, South Carolina appears poised, yet again, to shift standards by dumping the Common Core the state adopted just a few years ago. In the coverage of that continuing debate, two points are worth highlighting: (1) “Computer testing allows for a better assessment of both students’ abilities and teachers’ effectiveness….,” and (2) “Democrats say the bill forces the Legislature to spend money on technology in classrooms….,” including “

As I have examined before, the educational advantages of technology are mixed at best; technology creates equity concerns as well as outcome disparities (efforts to close the digital divide in schools often increase the achievement gap); and investments in technology are, by the nature of technology, an endless commitment of precious taxpayer dollars.

Capitalism and consumerism are hidden in plain sight in the U.S. Consumerism has a symbiotic relationship with technology in the broader economy, and technology has a related symbiotic relationship with the perpetually changing standards movement in education.

In the context of consumerism, then, the perpetual upgrading of technology and the perpetual changing of standards (and related high-stakes testing) are warranted and even necessary: New replaces old, only to be replaced by the soon-to-come newer.

But when we shift the context to a pursuit of equity and teaching/learning, technology as a constantly moving (and therefore never finished) investment is exposed as a different sort of “digital divide”—the gap between the technology we have accumulated now and the technology upgrades we have committed to in the future (and mostly a commitment based on new without any mechanism for determining better).

One of the many problems with adopting, implementing, and testing Common Core is the accompanying rush to invest in technology. That rush is also evident in the rise in calls for computer-graded writing. [The perceived efficiency of such investments in technology is myopic, I argue, much like buying a Prius for the narrow gas savings, which many consumers fail to weigh against the larger cost of the purchase (see also).]

Let me offer here a few concerns about the technology gold rush, and argue that we should significantly curtail most of the technology investments being promoted for public schools because those investments are neither fiscally nor educationally sound:

- Cutting-edge technology has a market-inflated price tag that is fiscally irresponsible for tax-funded investments, especially in high-poverty schools where funds should be spent on greater priorities related to inequity.

- Technology often inhibits student learning since students are apt to abdicate their own understanding to the (perceived) efficiency of the technology. For example, students using bibliography-generating programs (such as NoodleBib) consistently submit work with garbled bibliographies and absolutely no sense of citation format; they are also often angry with me, and with technology broadly, once they are told the bibliographies are formatted improperly.

- Technology often encourages teachers/professors to abdicate their roles to the (perceived) effectiveness of technology. For example, many professors using Turnitin to monitor plagiarism tend not to offer students instruction in proper citation and then simply punish students once the program flags their work as plagiarized.

- Technology requires additional time for learning the technology (and then learning the upgrades)—time better spent on the primary learning experiences themselves.

- Computer-based testing may be efficient in a certain sense, since students receive immediate feedback and computer programs can adapt questions to students as they answer, but neither of these features is necessarily an advantage in terms of good pedagogy or assessment. Efficient? Yes. But efficiency doesn’t ensure more important goals.

- In many cases, advocacy for increasing technology as well as the number of programs, vendors, and hardware (all involving immediate and recurring investments of funds) is driven by a consumer mindset and not a pedagogical grounding. I have seen large and complex systems adopted that offer little to no advantage over more readily available and cheaper uses of technology; I can and do use a word processor in conjunction with email in ways that are just as effective as more complex and expensive programs. As Perelman notes:

> Whatever benefit current computer technology can provide emerging writers is already embodied in imperfect but useful word processors. Conversations with colleagues at MIT who know much more than I do about artificial intelligence has led me to Perelman’s Conjecture: People’s belief in the current adequacy of Automated Essay Scoring is proportional to the square of their intellectual distance from people who actually know what they are talking about.

Technology-for-technology’s sake is certainly central to the consumer economy in the U.S. and the world. It makes some simplistic economic sense that iPhones are in perpetual flux so that grabbing the next iPhone contributes in some perverse way to a consumer economy.

Education, however, both as a field and as a public institution, should be (must be) shielded from that inefficient and ineffective digital divide: the gap between the technology we have now and the technology we could have, a gap that makes the current technology seem undesirable.

Especially if you are in public education, and especially if you have been in the field for 10 or 20 years, I urge you to take a casual accounting of the hardware and software scattered through your school and district that sits unused. Now consider the urgency and promise associated with all of it when it was purchased.

It’s fool’s gold, in many ways, and the technology we must have to implement next-generation tests today will be in the same closed storage closets with the LaserDiscs tomorrow.

The technology arms race benefits tech vendors, but not students, teachers, education, or society.

Computer-graded essays will not improve the teaching of writing and computer-based high-stakes testing will not enhance student learning or teacher quality.

But falling prey to calls for both will line someone’s pockets needlessly with tax dollars.

Technology has its place in education, of course, but currently, the rush to embrace technology is greatly distorted, driven by an equally misguided commitment to ever-changing standards and high-stakes tests.

Investing in new technology while children are experiencing food insecurity—as just one example—is inexcusable, especially when we have three decades of accountability, standards, testing, and technology investment that have proven impotent to address the equity hurdles facing schools and society.
