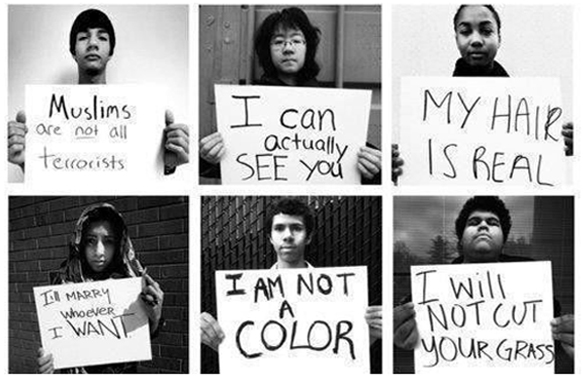

Image: Racial Stereotypes retrieved from tumblr

By Esther Quintero | Originally Published at Shanker Blog. April 4, 2014

This is the first in a series of three posts about implicit bias. Here are the second and third parts.

The research on implicit bias both fascinates and disturbs people. It’s pretty cool to realize that many everyday mental processes happen so quickly as to be imperceptible. But the fact that they are so automatic, and therefore outside of our conscious control, can be harder to stomach.

In other words, the invisible mental shortcuts that allow us to function can be quite problematic, and a real barrier to social equality and fairness, in contexts where careful thinking and decision-making are necessary. Accumulating evidence reveals that “implicit biases” are linked to discriminatory outcomes ranging from the seemingly mundane, such as poorer quality interactions, to the highly consequential, such as constrained employment opportunities and a decreased likelihood of receiving life-saving emergency medical treatments.

Two excellent questions about implicit bias came up during our last Good Schools Seminar on “Creating Safe and Supportive Schools.”

- First, does one’s level of education moderate the magnitude of one’s implicit biases? In other words, wouldn’t it be reasonable to expect people with more education to have a broader repertoire of associations and, therefore, to be able to see beyond clichés and commonplaces?

- Second, does the frequency of interaction with (and deep knowledge of) a person affect our reliance on stereotypic associations? Simply put, shouldn’t information that is individual-specific (e.g., Miguel loves Asimov novels) replace more generic information and associations (e.g., boys don’t like to read)?

The short (albeit simplified) answers are no and yes, respectively. Below, I elaborate on each, reflect on strategies that can help reduce the unintended ill effects of implicit biases, and touch on some implications for schools and educators.

Are More Educated People Less Biased?

What’s important here is our awareness of these associations that exist in our culture. It does not really matter that John has a Master’s degree or has traveled around the world. John could be the most knowledgeable person with the deepest egalitarian, non-essentialist beliefs – but what matters here is that John is also aware that most other people aren’t like him; that many others out there still believe that black men are more aggressive and more sexual or that women are more dependent, nurturing, and communal. Stereotypes operate implicitly (also here and here), regardless of our own race/gender, and even when our personal beliefs are completely to the contrary. In fact, many theorists have argued that implicit biases persist and are powerful determinants of behavior precisely because people lack personal awareness of them – meaning that they can occur despite conscious non-prejudiced attitudes and intentions.

So, to recap, it’s not primarily about education or knowledge, but perhaps a bit of the opposite: You would have to have lived under a rock all your life to claim true ignorance of the shared beliefs that exist in our society, or to claim that these beliefs don’t affect you in any way. At the risk of belaboring the point, let me also summarize three classic studies that explore the behavior of highly educated decision makers:

- In an audit study of employer hiring behavior, researchers Bertrand and Mullainathan (2003) sent out identical resumes to real employers, varying only the perceived race of the applicants by using names typically associated with African Americans or whites. The study found that the “white” applicants were called back approximately 50 percent more often than the identically qualified “black” applicants. The researchers found that employers who identified as “Equal Opportunity Employer” discriminated just as much as other employers.

- Steinpreis and colleagues (1999) examined whether university faculty would be influenced by the gender of the name on a CV when determining hireability and tenurability. Identical, fictitious CVs were submitted to real academics, varying only the gender of the applicants. Male and female faculty were significantly more likely to hire a potential male colleague than an equally qualified potential female colleague. In addition, they were more likely to positively evaluate the research, teaching, and service contributions of “male” job applicants than of “female” job applicants with the identical record. Faculty were four times as likely to write down cautionary comments when reviewing the CV of female candidates – comments included notes such as “we would have to see her job-talk”, or “I would have to see evidence that she had gotten these grants and publications on her own.”

- Finally, Trix and Psenka (2003) examined over 300 letters of recommendation for successful candidates for medical school faculty positions. Letters written for female applicants differed systematically from those written for male applicants. Letters for women were shorter, had more references to their personal lives and had more hedges, faint praise, and irrelevancies (e.g., “It’s amazing how much she’s accomplished.”, “She is close to my wife.”).

Does Familiarity Weaken Implicit Bias?

We rely on stereotypes (and other cognitive shortcuts) more heavily when there are more unknowns to a situation – for example, when we don’t know a person well, when we are unfamiliar with the goals of the interaction, when the criteria for judgment are unclear or subjective, etc. This suggests that the more information we have about a person or situation, the less likely we are to automatically fill in potential (knowledge) gaps with more “generic” information (e.g., stereotypes). In fact, “individuating” (gathering very specific information about a person’s background, tastes, etc.) has been proposed as an effective “de-biasing” strategy. When you get to know somebody, you are more likely to base your judgments on the particulars of that person than on blanket characteristics, such as the person’s age, race, or gender. This suggests that teachers may be better positioned than other professionals (e.g., doctors who see patients sporadically for a few minutes at a time) to overcome potential biases.

I am not suggesting that teachers, because they are teachers, are immune to implicit biases – in fact there is some research documenting that they are not (see Kirwan Institute’s recent review, pp. 30-35). However, teachers may be better situated to combat these biases than professionals in other fields. Getting to know their students well is part of a teacher’s job description. Thus, a potential intervention aimed at breaking stereotypic associations might build on and support this aspect of teachers’ work – for example, by providing a structured and systematic way to gather information on students during the first weeks of the new school year.

In addition, teachers are well positioned to actually disrupt classroom (status) hierarchies (which can emerge among students based on characteristics such as race, gender, academic ability). For example, by (authentically) praising a low-status student on something specific that the student did well, the teacher can effectively raise the social standing of that student in the classroom. This, in turn, can elevate both the student’s confidence and self-assessment (i.e., what the student thinks he/she is capable of accomplishing) as well as his/her peers’ expectations (i.e., what other classmates think he/she is capable of). This is a powerful way of breaking stereotypic associations and equalizing learning conditions in the classroom.

In sum, formal education, in and of itself, is not enough to disrupt associations that are deeply embedded in the culture. We are all profoundly aware of these associations (even when we don’t share them) and for that reason alone, our thoughts and behaviors can be subtly and implicitly influenced by them. Individuation (or gathering information about the specific person in front of you) can, however, be an effective strategy to break automatic associations. This technique can help you see (or assign more weight to) the particulars of a person before you consider his/her age, class, gender, race, ethnicity, sexual orientation, etc.

In this respect, I noted that teachers may be better positioned than, say, doctors or judges, to individuate their students – and that this can facilitate more objective and less stereotypic judgments and more equitable classrooms. I also noted that there are strategies and tools that schools could take advantage of to support teachers’ natural desire to get to know their students well. In my next post, I will provide some additional ideas on how to do this, as well as more information on research-based strategies that have been shown to reduce implicit biases and what their implications might be for schools and educators.

~ Esther Quintero
